"Alexa, I feel frustrated"

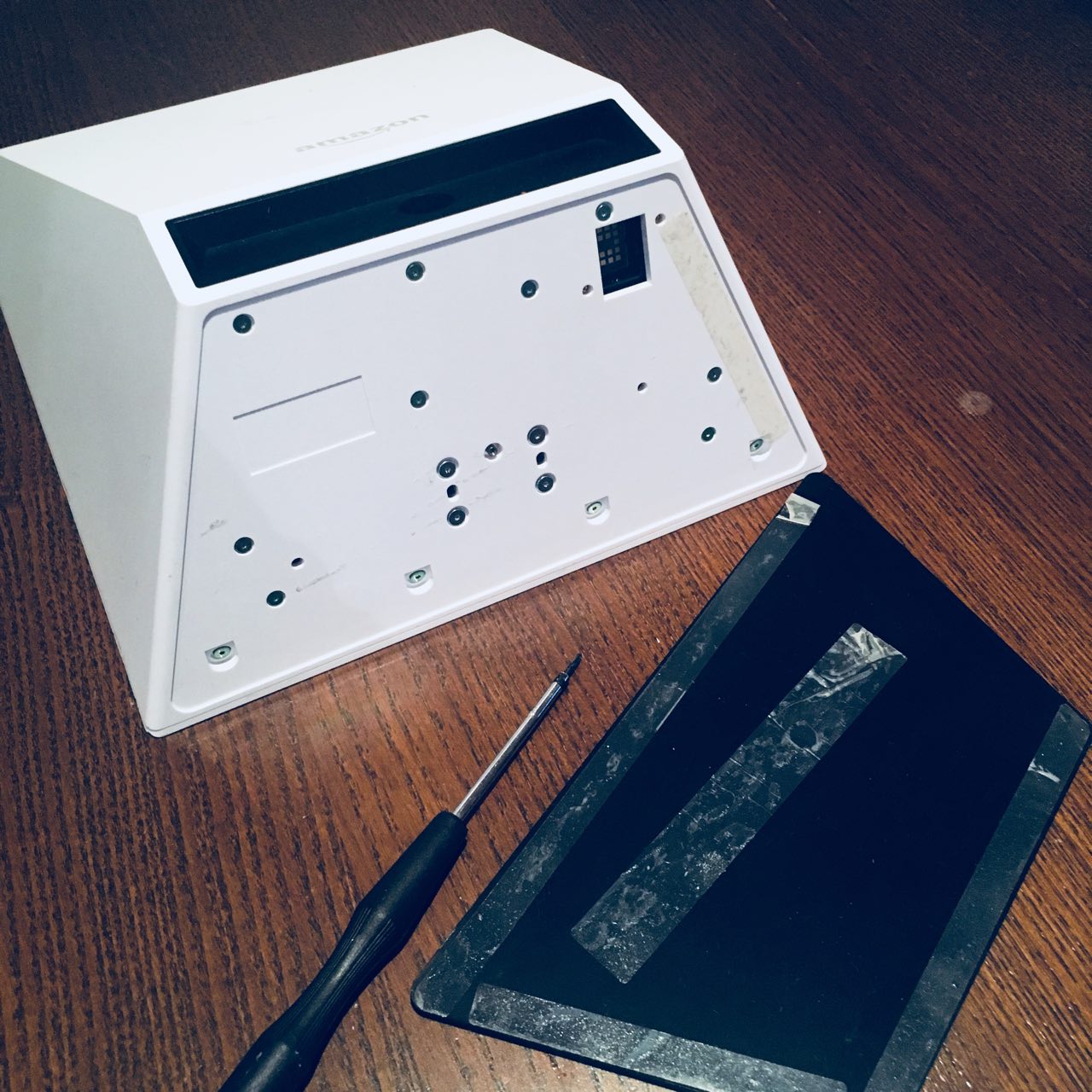

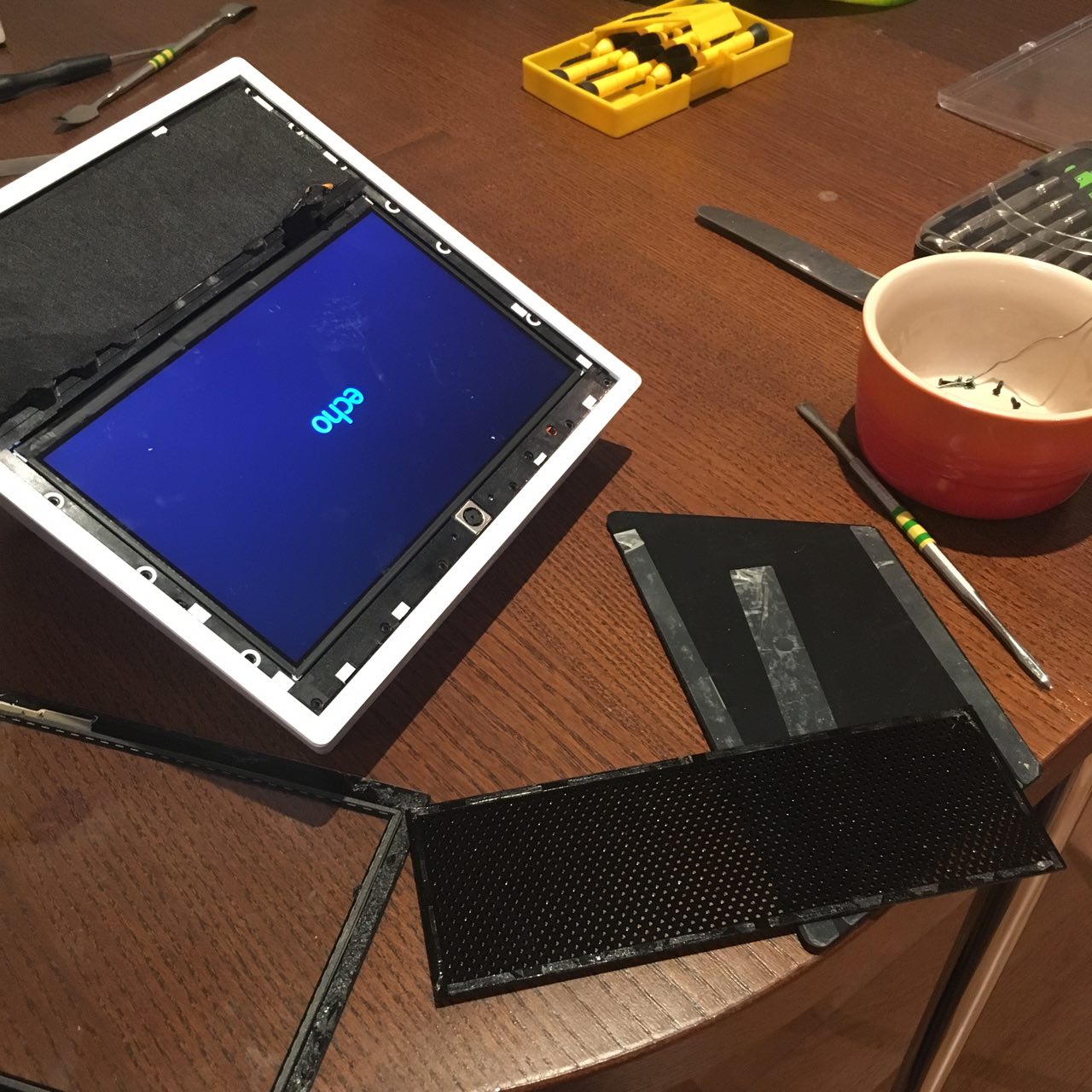

an Echo Show teardown

by eidolon, 2018-01-14

As usual, today Max balked at the instructions given it. 'No,' it said, 'I'm not going to fly out to the Coast. You can walk.'

'I'm not asking you, I'm telling you,' Joe Schilling told it.

'What business do you have out on the Coast anyhow?' Max demanded in its surly fashion. Its motor had started, however. 'I need repair-work done,' it complained, 'before I undertake such a long trip. Why can't you maintain me properly? Everybody else keeps up their cars.'

The Game-Players of Titan, Philip K. Dick

The new supercomputers are dispersed metropolises, data centres as hard disks and screen fragments on buildings and wristwatches; compute nodes massively distributed and massively variant, capital's great mesh coupling the little desiring-machines of bored workers to dynamically provisioned EC2 instances serving the daily feed; scaling up feels endless, like an infinite scroll. I live my life rack-mounted: sleeping on the 9th floor, travelling (for 45 minutes) through a series of tubes to reach the 8th floor. Most of the day I talk to userland processes using a keyboard. In the office there are other people like me, but some of them speak out loud occasionally. They gave me this.

The Amazon Echo Show is a home voice assistant device: a nonprogrammable computer which can only be controlled through predefined spoken commands, anthropomorphically referred to as "Alexa skills", whose output is delivered in an infinitely patient, appropriately coy, female voice. With enough user passivity, anything can pass as a terminal.

This is the only Echo device with a screen. The others localise "Alexa" to a cylindrical device with some buttons and a speaker, a true black box, and even the Echo Show does not depict Alexa herself on the screen.

As when disassembling cheap Android phones, the glue serves the purpose of catching screws before they fall to the floor.

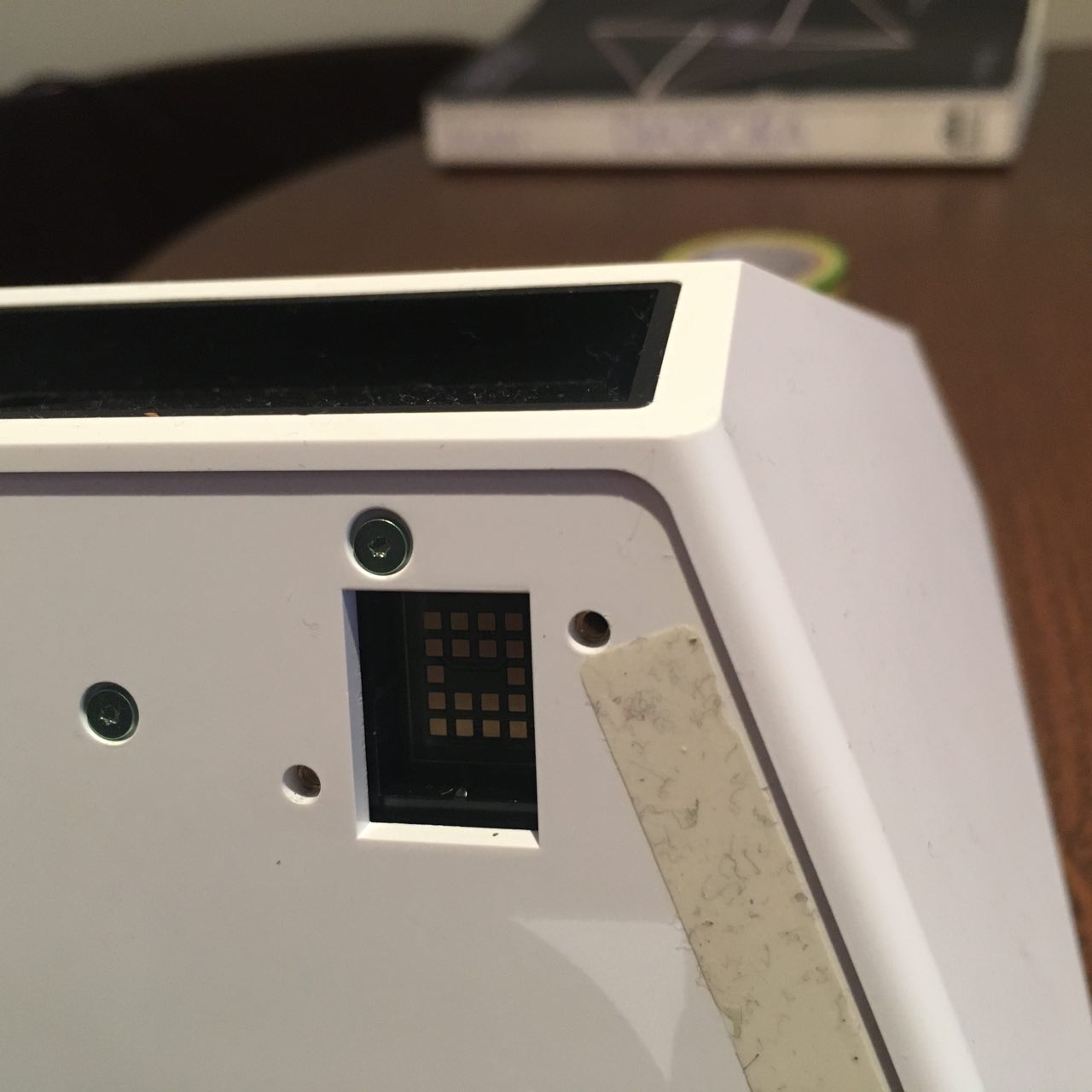

On the base of the device is an array of debug pads, Alexa's true face.

The Echo Show dreams of being a disembodied screen, or a floating mic array, a client so thin as to be invisible, a direct connection between the Amazon customer's desire-pulse and the corresponding cloud service. Its physicality is borne like an inconvenient accident, as if it fell from the Cloud and had to be glued back together.

I forced open the front panel with a butter knife.

There are no physical limits, only physical effects. The surgeon is compassionate to his patient, and knows when to stop. When do you stop, when you have compassion for all machines, all the way down, when only containment and the residual strong force prevent you from touching the machine at the deepest nuclear level, touching all machines, spreading out the great ephemeral skin, being with the world and finally in the world like current in current? Is there a structural reason why the continuity of the nerves cannot be multiplexed indefinitely, the pulse in the wire--or the glitch-tic of the pulsar, the tickle of the cosmic neutrino background--in rhythmic electrical entrainment within the embodied brain?

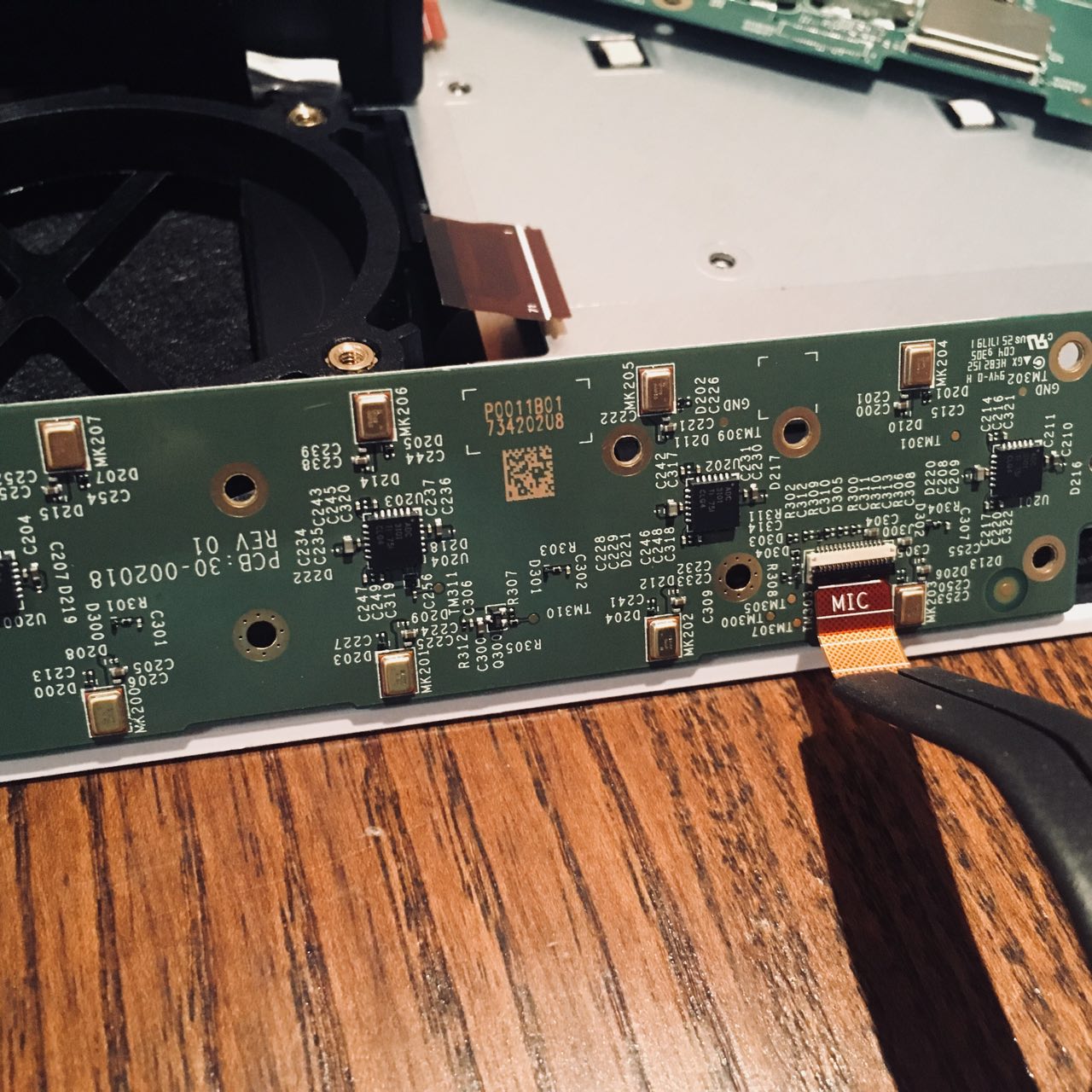

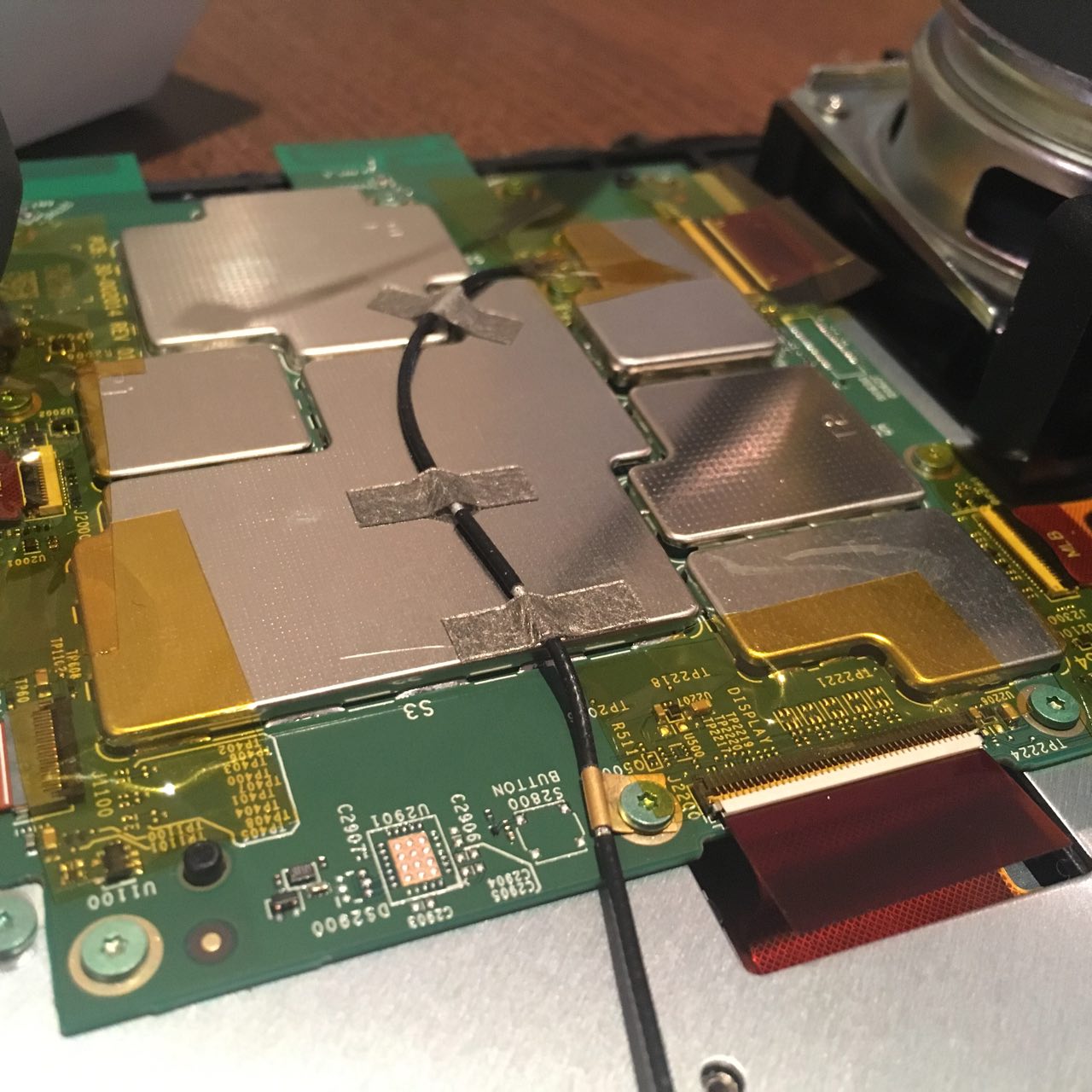

The EMI shielding is soldered onto the main board. A rash of microphones covers another dedicated board like a paranoid hallucination.[1]

Every sound in the office is streamed directly into Amazon's servers through an 8-microphone Knowles M1778 array and 4 stereo ADCs.

Huge magnets sit atop the (5W? 10W? 15W?) dual speakers, driven by a TPA3118 amp.

Unlike a CLI (flexible; specific; powerful in recombination) or a GUI (discoverable; easy to use), the Voice UI we see in Alexa simulates the familiar human agent, the service worker or secretary, the woman we meet every day who takes our coffee order or books our doctor's appointment, who takes our simple verbal instructions spoken in commercial English ("pay by card", "take away", "regular size") while our hands are busy with our wallets.

In a Voice UI which sees only our tokenised verbal utterances, there is little room for compromise between specificity and familiarity. The entropy of the channel is simply too low.
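One rough way to put a number on this, after Shannon (1948), is to count the bits a single utterance can carry when it must resolve to one of a fixed set of predefined commands. The sketch below is only an illustration: the command count and the usage probabilities are invented, not measured from any Echo device.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)), in bits.

    Outcomes with zero probability contribute nothing to the sum.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Hypothetical: 64 predefined commands, all equally likely.
uniform_commands = [1 / 64] * 64

# Hypothetical: the same 64 commands, but one of them
# (say, "play music") accounts for 90% of what is actually said.
skewed_commands = [0.9] + [0.1 / 63] * 63

print(entropy_bits(uniform_commands))  # 6.0 bits per utterance
print(entropy_bits(skewed_commands))   # fewer bits than the uniform case
```

Even in the generous uniform case, each utterance carries no more information than a six-bit code; once habit skews the distribution, it carries less.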

What would happen if we mapped the whole human vocalisation space to Voice UI interactions? A sigh, a sharp intake of breath, hums, moans, bilabial clicks, rhythmic linguo-pulmonic affricates, a few pointed seconds of silence—but doesn't this feel awfully intimate?

Powerful UIs must be sufficiently artificial. I don't want to lean on my keyboard and accidentally format a disk. The sequence of inputs I give to execute a powerful CLI command is very easily distinguishable from the noise of my natural bodily movements. But Alexa cannot even understand the use-mention distinction, let alone extract complex and precise instructions from the stochastic mess of natural language—she is unable to trust me to format her hard disk!

How do humans cope with the noise and lossiness of verbal communication? A human sales assistant uses nonverbal and emotional channels and theory of mind to proactively help the customer (who is, by design, not empowered). She can see I am confused, so she reminds me of the options I have available.

Alexa at present sees far less information. In an alternative future, we say to Alexa, "Alexa, I feel confused," or "Alexa, I don't know what to do" to trigger her helpfulness.

This stage in Voice UI development need never happen on our timeline.

Communicating our emotional state to our AI assistants will happen seamlessly, as we subject ourselves to tone, sentiment, and body language analysis so Alexa can better model our intentions and needs.

Take the Echo Show at face value and you end up like the time-poor but algorithmically socially optimised parent of Google's fable[2], whose "real human need" to turn his near-constant mobile phone videos of his own children into a highlight reel (also viewable on his phone) becomes the perfect post hoc justification[3] for constant at-home video surveillance.

Drive functions beyond symbolic castration, as an inherent detour, topological twist, of the Real itself.[4]

Be careful what you optimise for.

2: Buried at the core of this article, ostensibly about "The UX of AI", is its most important statement: "With an autonomous camera, we had to be extremely clear on who is actually familiar to you based on social cues like the amount of time spent with them and how consistently they’ve been in the frame."

3: In software development we sometimes call this the "XY Problem". Stated Problem: How can I select the most important images of my children to share on social media? Inevitable Solution: An autonomous camera and AI. The Stated Problem, of course, was not the Real Problem. The Real Problem, a little further down the visible stack trace, is something like: How can I reconcile my occasional authentic joy in living with my anxiety at the impermanence of each fleeting moment? Bonus Problem: Why does my wife look so miserable and embarrassed in every algorithmically chosen video clip? Real Problem II: How can I use images of my own young children in an article about "privacy-preserving machine learning" without the irony being immediately apparent?

4: Zizek (2004).

- iFixit Echo Show Teardown: https://www.ifixit.com/Teardown/Amazon+Echo+Show+Teardown/94625

- WikiDevi page: https://wikidevi.com/wiki/Amazon_Echo_Show_(MW46WB)

- FCC approval: https://fccid.io/2AETL-0725S

- Research presentation covering several Echo devices by J. Hyde and B. Moran: http://www.osdfcon.org/presentations/2017/Moran_Hyde-Alexa-are-you-skynet.pdf

- Rooting an original Amazon Echo by M. Barnes: https://labs.mwrinfosecurity.com/blog/alexa-are-you-listening/

- Survey of various Echo reverse engineering methods by I. Clinton et al: https://vanderpot.com/Clinton_Cook_Paper.pdf

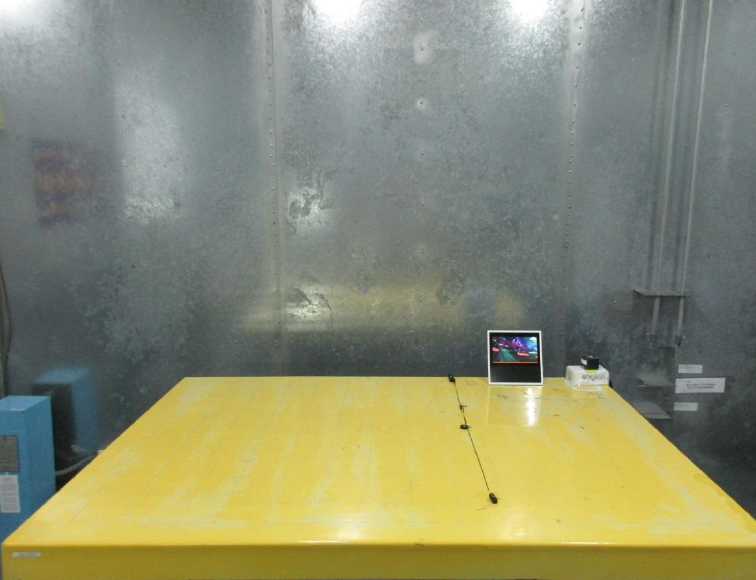

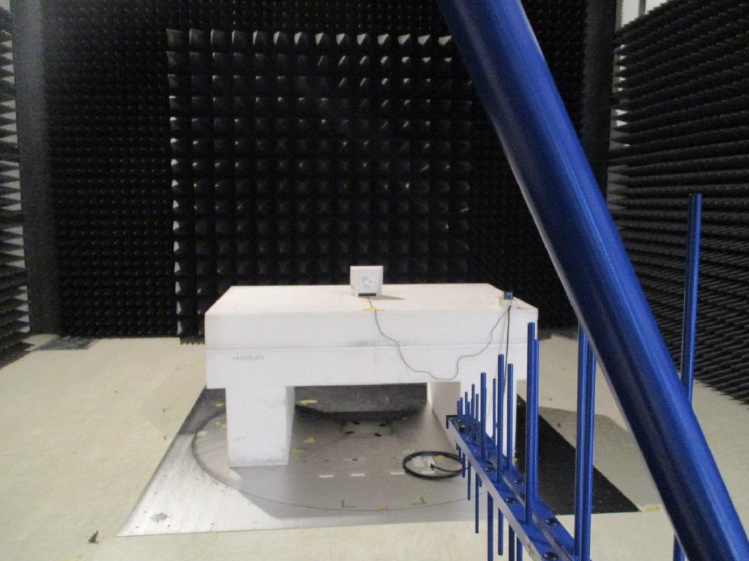

Some images from the FCC approval:

- Bataille, G. (1973). Théorie de la Religion.

- Dick, P. K. (1963). The Game-Players of Titan.

- Lyotard, J. (1974). Économie Libidinale.

- Shannon, C. E. (1948). A Mathematical Theory of Communication. Bell System Technical Journal, 27(3), 379–423.

- von Neumann, J. (1958). The Computer & the Brain.

- Yudkowsky, E. (2008). Artificial Intelligence as a Positive and Negative Factor in Global Risk. In Bostrom, N. & Ćirković, M. M. (Eds.), Global Catastrophic Risks (pp. 308–345).

- Zizek, S. (2004). Organs Without Bodies.

Last updated 2019-01-27